Python + scikit-learn is the must-have tool for Data Scientist’s daily work. Spark for data processing and ML model training You can create it directly from the page of the active Kinesis Data Stream.ģ. Setting up a Kinesis Firehose delivery stream as the consumer for Kinesis Stream is straightforward. The Kinesis Firehose is one consumer in our data pipeline because we would like to deliver the raw data into S3 (for the purpose of backups as well as creating our training dataset). Refer to the diagram in AWS documentation for Kinesis Data Streams High-Level Architecture. The consumers are essentially the applications you will build to process or analyze the streaming data. Now with the Tweepy Streaming as the producer to our Kinesis Stream, we will have the consumers on the other side to consume the data in the Stream. A Kinesis data stream stores records from 24 hours by default, up to 8760 hours (365 days) according to AWS documentation. The time period from when a record is added to when it is no longer accessible is called the retention period. You should estimate the input and output data rate to choose a proper number of shards More shards mean higher throughput capacity. There are two important points to consider when creating a data stream: Usually, you can have some simple processing on the coming tweets (e.g., what fields to keep) in this step meanwhile you may not want to alter the raw data too much (i.e., keep the raw data is a good practice).Ĭreate a Kinesis data stream in AWS.

TOOLS FOR DATA ANALYSIS FOR TWEETS CODE

The complete code can be found on GitHub. In this way, we have the producer in our streaming pipeline. An important step is to overload the “ on_data” method in the “ StreamListener” class so we can put the tweets into Kinesis Data Stream. Otherwise, using the Tweepy streaming API is straightforward. You will need to apply for a Twitter Developer account in order to set up the Twitter streaming. (2) The streaming will be used to demonstrate the real-time analysis. (1) Create the dataset for the ML model training purpose.

To collect tweets in real time is the very first step for two purposes:

Collect tweets from the Twitter Streaming API Using Python Note: We will use QuickSight to connect to Athena to build a dashboard for visualization.ġ. QuickSight: a cloud-scale business intelligence (BI) service that you can use to deliver easy-to-understand insights.Note: we will use Athena to access the processed tweets that have been saved in S3. Athena: a serverless, interactive query service to query data and analyze big data in Amazon S3 using standard SQL.Note: we use EMR to run Spark for data processing and model training, in a distributed fashion. EMR: service that utilizes a hosted Hadoop framework running on the Amazon EC2 (computing instance) and Amazon S3.Note: We use a Kinesis Firehose deliver stream as a consumer of the Kinesis Data Stream, and save the raw tweets into the S3 directly. Kinesis Firehose: service to deliver real-time streaming data to destinations such as Amazon S3, Redshift, ElasticSearch, etc.Note: the Tweepy streaming will be the producer that will put data into the Kinesis Data Stream. Kinesis Data Streams: streaming service to collect and process large streams of data record in real time.We also use Spark to process the Streaming, for model scoring/inference. Note: we will use Spark’s machine learning library to train an LDA (Latent Dirichlet Allocation) model for topic modelling, which will give you a quick taste of ML model training in a distributed system. TextBlob to do simple sentiment analysis on tweets (demo purpose only).Tweepy to create a stream and listen to the live tweets.(2) In the end, we will also build a dashboard for visualization. With the sentiment analysis, we can also catch a glimpse of the public attitudes towards this field.

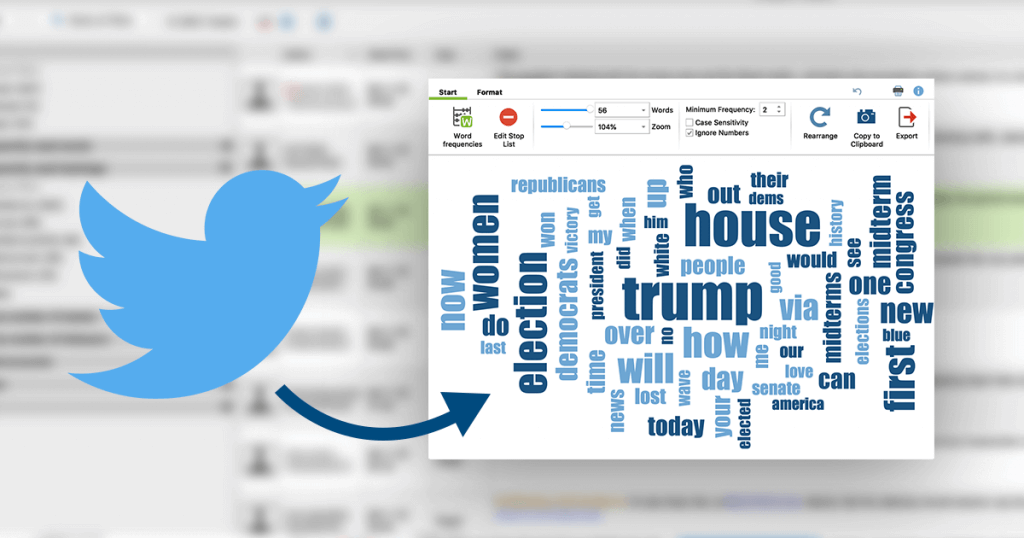

In other words, we will explore the latent topics for tweets that are talking about AI and Machine Learning. Note: (1) To avoid flooding ourselves with the tweets on the Internet, I will collect the live tweets filtered by the hashtags #AI, #MachineLearning. A real-time analysis to explore the underlying topics and the sentiment for live tweets.
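As a rough illustration of the producer step (overriding "on_data" to put tweets into the Kinesis data stream), here is a minimal sketch. It is not the code from the GitHub repository. The field list `KEEP_FIELDS`, the helper names, and the newline-delimited JSON encoding are assumptions made for this example; `put_record` with `StreamName`, `Data`, and `PartitionKey` is the boto3 Kinesis client call.

```python
import json

# Hypothetical field selection; the article does not say which fields it keeps.
KEEP_FIELDS = ("id_str", "created_at", "text", "lang")


def slim_tweet(raw_data, keep_fields=KEEP_FIELDS):
    """Parse one raw tweet (a JSON string) and keep only the selected fields."""
    tweet = json.loads(raw_data)
    return {key: tweet[key] for key in keep_fields if key in tweet}


def put_tweet(kinesis_client, stream_name, raw_data):
    """Send one lightly processed tweet to a Kinesis data stream.

    kinesis_client is expected to behave like boto3.client("kinesis").
    """
    tweet = slim_tweet(raw_data)
    return kinesis_client.put_record(
        StreamName=stream_name,
        # One JSON document per line, so records stay separable after batching.
        Data=(json.dumps(tweet) + "\n").encode("utf-8"),
        # The partition key determines which shard receives the record.
        PartitionKey=tweet.get("id_str", "unknown"),
    )
```

Inside an overridden `on_data(self, raw_data)`, one would call `put_tweet(client, stream_name, raw_data)` with `client = boto3.client("kinesis")`; the stream name is whatever was chosen when the data stream was created.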
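The advice to estimate input and output data rates before choosing the number of shards can be turned into a small calculation. The sketch below assumes the standard per-shard limits of Kinesis Data Streams (1 MB/s or 1,000 records/s for writes, 2 MB/s for reads shared by all consumers); the function name and the example traffic figures are made up for illustration.

```python
import math

# Per-shard limits for Kinesis Data Streams with shared-throughput consumers.
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def estimate_shards(avg_record_kb, records_per_sec, num_consumers):
    """Return the smallest shard count that satisfies write and read limits."""
    write_mb_per_sec = avg_record_kb * records_per_sec / 1024
    # Every consumer reads the full stream, so read traffic scales with consumers.
    read_mb_per_sec = write_mb_per_sec * num_consumers
    return max(
        1,
        math.ceil(write_mb_per_sec / WRITE_MB_PER_SHARD),
        math.ceil(records_per_sec / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mb_per_sec / READ_MB_PER_SHARD),
    )


# Example with invented numbers: 4 KB tweets, 50 tweets/s, two consumers.
print(estimate_shards(avg_record_kb=4, records_per_sec=50, num_consumers=2))
```

For a hashtag-filtered stream like the one here, a single shard is likely enough; the calculation matters more once the filter is widened or more consumers are attached.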
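The article creates the Firehose delivery stream from the console page of the Kinesis data stream. For readers who prefer scripting it, the sketch below shows roughly what the equivalent boto3 request could look like. The helper names, the `raw-tweets/` prefix, and the buffering values are assumptions; the ARNs must come from your own account, and the IAM role needs permission to read the stream and write to the bucket.

```python
def firehose_request(name, stream_arn, bucket_arn, role_arn, prefix="raw-tweets/"):
    """Build keyword arguments for the Firehose create_delivery_stream call."""
    return {
        "DeliveryStreamName": name,
        # Read from an existing Kinesis data stream instead of direct puts.
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            "Prefix": prefix,  # hypothetical S3 key prefix for the raw tweets
            # Flush to S3 after 5 MB or 300 seconds, whichever comes first.
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},
        },
    }


def create_firehose(firehose_client, **kwargs):
    """firehose_client is expected to behave like boto3.client("firehose")."""
    return firehose_client.create_delivery_stream(**firehose_request(**kwargs))
```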
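For the simple, demo-only sentiment analysis with TextBlob, a sketch along the following lines may help. TextBlob reports a polarity score between -1 and 1; the 0.05 threshold used to turn that score into a label is an arbitrary choice for this example, not something the article specifies.

```python
def label_polarity(polarity, threshold=0.05):
    """Map a polarity score in [-1, 1] to a coarse sentiment label."""
    if polarity > threshold:
        return "positive"
    if polarity < -threshold:
        return "negative"
    return "neutral"


def tweet_sentiment(text):
    """Return (polarity, label) for one tweet text using TextBlob."""
    # Imported here so the labelling helper works without TextBlob installed.
    from textblob import TextBlob

    polarity = TextBlob(text).sentiment.polarity
    return polarity, label_polarity(polarity)
```

Keeping the labelling separate from the scoring makes it easy to swap TextBlob for a stronger model later without touching the dashboard logic.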
